AVX512 faster than the very best GPUs?

Moderators: Site Moderators, FAHC Science Team

AVX512 faster than the very best GPUs?

It looks like a new algorithm running on AVX512 can speed up certain AI tasks to the point of leaving even a V100 in the dust. Would this be useful for FAH?

https://www.tomshardware.com/news/cpu-v ... imizations

https://techxplore.com/news/2021-04-ric ... odity.html

https://www.marktechpost.com/2021/04/10 ... -than-gpu/

Re: AVX512 faster than the very best GPUs?

I don't know much about deep learning, but given that said task requires a huge amount of VRAM and F@h barely uses any, I would guess the two workloads aren't very similar.

GPU only

RTX 3060 12GB Gigabyte Gaming OC [currently mining]

Folding since 14/02/2021

JimboPalmer

- Posts: 2573

- Joined: Mon Feb 16, 2009 4:12 am

- Location: Greenwood MS USA

Re: AVX512 faster than the very best GPUs?

F@H uses a great deal of single precision floating point math (FP32) and a bit of double precision (FP64).

AVX256 can do 8 FP32 operations at once per thread, or 4 FP64 operations. (With the FMA extension that Intel introduced alongside AVX2, a multiplication and an addition can be fused into a single instruction, a*b + c, so if that is the math you need, that is 16 operations at once per thread.)

AVX512 will be twice that.

In current Intel CPUs, the clock rate drops while running AVX512 code, to prevent overheating.

The very best GPUs will do over 5000 FP32 operations at once, again at lower clock rates.
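The per-instruction arithmetic above can be sketched in a few lines of Python. This is a minimal illustration under the post's own assumptions: the number of lanes is the register width divided by the element width, and a fused multiply-add is counted as two operations. It does not model how many such instructions a core can issue per cycle, which depends on the number of execution units.

```python
def lanes(register_bits, element_bits):
    """Elements that fit side by side in one vector register."""
    return register_bits // element_bits


def ops_per_instruction(register_bits, element_bits, fma=False):
    """Operations one vector instruction performs; a fused multiply-add (a*b + c) counts as 2."""
    return lanes(register_bits, element_bits) * (2 if fma else 1)


for name, bits in (("AVX256", 256), ("AVX512", 512)):
    print(f"{name}: FP32 {ops_per_instruction(bits, 32)}, "
          f"FP64 {ops_per_instruction(bits, 64)}, "
          f"FP32 with FMA {ops_per_instruction(bits, 32, fma=True)}")
```

This reproduces the 8 / 4 / 16 figures for 256-bit registers and doubles them for 512-bit ones.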

Tsar of all the Rushers

I tried to remain childlike, all I achieved was childish.

A friend to those who want no friends

Re: AVX512 faster than the very best GPUs?

JimboPalmer wrote:

F@H uses a great deal of single precision floating point math (FP32) and a bit of double precision (FP64).

AVX256 can do 8 FP32 operations at once per thread, or 4 FP64 operations. (AVX2 allows us a Multiplication and an Addition at the same time on the same inputs, so if that is the math you need, 16 operations at once per thread)

AVX512 will be twice that.

In current Intel CPUs, the clock rate slows down during AVX512, to prevent overheating.

The very best GPUs will do over 5000 FP32s at once, again at lower clock rates.

Well, let's say this is the math. So 16 ops per thread for AVX256. AVX512 doubles that, that is, 32 ops per thread. And many Intel CPUs have two AVX512 units, so 64 ops per thread.

On a 10980XE (18 cores, 36 threads), for example, I should get a total of 2304 (64 ops × 36 threads), which is about half a top-tier GPU. And with my cooling solution, I doubt that the clock rate would drop. I'd take that!
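A minimal Python sketch of the 10980XE estimate, under stated assumptions: 18 cores with 2 hardware threads each, two AVX-512 FMA units per core, and FMA counted as two operations. The post multiplies by all 36 threads; since both hyperthreads on a core share that core's execution units, counting per core instead gives half the figure. Both numbers are theoretical peak FP32 operations per clock cycle, not measured throughput.

```python
# Assumptions (not measured): 18 cores, 2 SMT threads per core,
# 2 AVX-512 FMA units per core, FMA counted as 2 operations.
CORES = 18
THREADS_PER_CORE = 2
FP32_LANES_AVX512 = 512 // 32   # 16 FP32 values per 512-bit register
FMA_OPS = 2                     # multiply + add
FMA_UNITS_PER_CORE = 2          # assumed unit count for this part

OPS_PER_UNIT_SET = FP32_LANES_AVX512 * FMA_OPS * FMA_UNITS_PER_CORE  # 64


def per_thread_estimate():
    """The post's method: 64 ops for every hardware thread."""
    return OPS_PER_UNIT_SET * CORES * THREADS_PER_CORE


def per_core_estimate():
    """Counting execution units per core, since SMT threads share them."""
    return OPS_PER_UNIT_SET * CORES


print(per_thread_estimate())  # 2304
print(per_core_estimate())    # 1152
```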

Re: AVX512 faster than the very best GPUs?

Is it that linear? Does a 512-bit register really process sixteen 32-bit floating-point values at once?

Or is there overhead that makes it fewer than 16?

The same could be said going from 16-bit to 32-bit CPUs, and from 32-bit to 64-bit CPUs.

However, 64-bit systems never ran twice as fast as 32-bit systems on a 32-bit OS.

JimboPalmer

Re: AVX512 faster than the very best GPUs?

The clock rate decrease is built into the CPUs: it is there to prevent overheating, not triggered by overheating.

https://travisdowns.github.io/blog/2020 ... freq1.html

Two AVX512 units allow both threads in a core to execute at once, so I am not sure you get to multiply by two there.

More impressive to me is AMD Epyc with 128 threads of 256-bit AVX2, but to each his own.
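To compare the two chips on paper, here is a Python sketch that counts theoretical peak FP32 operations per clock cycle, per core. The execution-unit counts are assumptions: two 512-bit FMA units per core for the 18-core i9-10980XE, and two 256-bit FMA units per core for a 64-core, 128-thread Epyc. Any clock passed to `peak_gflops` is a hypothetical input, not a spec figure.

```python
def peak_fp32_ops_per_cycle(cores, vector_bits, fma_units_per_core):
    """Theoretical FP32 ops per clock: cores * lanes * 2 (FMA) * FMA units per core."""
    lanes = vector_bits // 32
    return cores * lanes * 2 * fma_units_per_core


def peak_gflops(ops_per_cycle, ghz):
    """Scale per-cycle ops by a clock rate; ghz is a caller-supplied assumption."""
    return ops_per_cycle * ghz


# Unit counts below are assumptions, not vendor-confirmed figures.
intel_18c = peak_fp32_ops_per_cycle(cores=18, vector_bits=512, fma_units_per_core=2)
epyc_64c = peak_fp32_ops_per_cycle(cores=64, vector_bits=256, fma_units_per_core=2)

print(intel_18c, epyc_64c)  # 1152 2048
# Clock ratio the 18-core part would need just to tie on paper:
print(f"{epyc_64c / intel_18c:.2f}x")  # 1.78x
```

Because the AVX512 downclock is built in rather than thermal, under these assumptions the 18-core part would need roughly 1.78× the Epyc's sustained clock just to match it on paper.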

Re: AVX512 faster than the very best GPUs?

While AVX512 on paper approaches double the throughput of AVX256, that simply doesn't happen in real life. "Advertised" throughput numbers are technically maximums, but they don't work out in practice. GROMACS originally introduced support for AVX256 with a footnote saying they were not supporting AVX512 because it added very little to GROMACS throughput. (They eventually relented.)
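One common way to see why advertised peaks rarely show up in practice is the roofline model, brought in here as an illustration rather than anything the GROMACS developers cite: attainable throughput is capped by either peak compute or by memory bandwidth times arithmetic intensity (FLOPs performed per byte moved). The peak and bandwidth values in this sketch are hypothetical placeholders.

```python
def attainable_gflops(peak_gflops, bandwidth_gbs, flops_per_byte):
    """Roofline bound: the lower of the compute roof and the memory roof."""
    return min(peak_gflops, bandwidth_gbs * flops_per_byte)


PEAK_GFLOPS = 3000.0   # hypothetical advertised peak
BANDWIDTH_GBS = 100.0  # hypothetical sustained memory bandwidth

for intensity in (0.25, 1.0, 10.0, 100.0):
    achieved = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GBS, intensity)
    print(f"{intensity:6.2f} FLOP/byte -> {achieved:7.1f} GFLOP/s "
          f"({achieved / PEAK_GFLOPS:.0%} of peak)")
```

With these made-up numbers a kernel needs about 30 FLOPs per byte of memory traffic before the advertised peak is even reachable. Below that, doubling the vector width raises only the compute roof, not the attainable throughput.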

AI throughput numbers have very little meaning in the FAH world. Ignore them.

Posting FAH's log:

How to provide enough info to get helpful support.

Re: AVX512 faster than the very best GPUs?

So, having talked to someone with extensive AVX512 experience (a benchmark author I'm not naming because I haven't asked them), here are the issues with AVX512 (paraphrased from memory, so this isn't gospel):

- At 32 registers × 512 bits, the architectural register file alone is 16 Kbit (2 KB). Keeping all that register state fed can strain cache sizes and bandwidth, because the data volume can exceed what the caches are designed for. Rocket Lake has 80 KB of L1 per core, but only 48 KB of that is for data.

- Intel's interconnect sucks; it just doesn't have anything close to the bandwidth of AMD's right now.

- The clock speed hit is severe if you're not staying in AVX512 constantly. Mixed workloads are not recommended at all until Intel figures this out. Running AVX2, even with its own downclock, can be advantageous if you don't really need the width.

- Workloads that stay almost wholly within the register file will vastly outperform almost anything else, even with all those issues.

- It can be worth using AVX512 registers as if they were cache, just to avoid going to the cache and the consequences of that.

- Pretty much every compiler has serious issues with AVX512, either resulting in possible data corruption or just not saving registers properly in some cases.
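The register-file arithmetic in the first point can be checked directly. This sketch counts only the 32 architectural ZMM registers; the physical register file a core uses for register renaming is larger and is not modeled here. The 48 KB L1 data cache figure is the Rocket Lake number quoted above.

```python
ZMM_REGISTERS = 32          # zmm0 .. zmm31
BITS_PER_REGISTER = 512
L1D_BYTES = 48 * 1024       # Rocket Lake L1 data cache per core, as quoted above

register_bits = ZMM_REGISTERS * BITS_PER_REGISTER
register_bytes = register_bits // 8

print(f"{register_bits} bits = {register_bytes} bytes")  # 16384 bits = 2048 bytes
print(f"L1D holds {L1D_BYTES // register_bytes} copies of the register file")  # 24
```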

Re: AVX512 faster than the very best GPUs?

mgetz wrote:

So having talked to someone with extensive AVX 512 experience (a benchmark author I'm not naming because I haven't asked them), AVX512 has the following issues (paraphrased from memory so this isn't gospel):

[...]

My personal observation is that for anything that needs extreme SIMD bandwidth but also memory, GPUs will almost always win. The fact of the matter is that GDDR just has more bandwidth, period. So can AVX512 get good results? Yes, in some cases where it's worth it to be on the CPU. But honestly, CUDA is actually easier to develop for, IMO.

You're basically saying that we're not there yet: that it is possible to fully utilize the extensions, but only in certain workloads.

Give it a few years, and all those issues will be sorted out and become part of common knowledge.

Re: AVX512 faster than the very best GPUs?

MeeLee wrote:

You're basically saying that we're not there yet.

That it is possible to fully utilize the extensions, but that they can only be done in certain workloads.

Give it a few years, and all those issues will be sorted out, and become part of common knowledge.

Not quite. What I'm saying is that Intel likes really good LINPACK scores, but for most reasonable use cases GPUs scale better because of memory bandwidth and interconnect. AVX512 is pretty much a GPU core; Intel themselves took that tack with the Knights series of CPUs. Basically, the use cases fall into the following:

- You really like Intel and hate GPUs (even Intel ones)

- Your latency requirements are such that even the P-state transitions triggered by AVX512 (and the latency they cause, which can itself be significant) are worth taking, or are accounted for or avoided by careful hand coding. You also assiduously avoid any serious need to go to memory.

- Your workload fits into cache/registers

- Your workload is tiled and you can't fit in the memory of a datacenter GPU

- You need extremely precise numerics that are extremely controllable down to the bit level, usually for verification of other workloads

Skylake-X was basically built entirely for the HFT community, because their use case actually fits within all of those parameters: extremely low latency, models that fit in L1/L2 cache, etc. But for scientific computing or AI, GPUs have largely been delivering, because even if they lack some features (features that are in the latest revs of NVidia and AMD cards, last I checked), they have the raw brute force to muscle through.

So honestly? I agree with Linus Torvalds: AVX512 is a waste of die space. It could have been much better spent on larger caches or other things that have a much bigger effect on real-world workloads than new instructions do. Intel should have just released it as an unlisted DSP chip for the HFT folks. This isn't a "few years" sort of thing; this is a "will probably be removed" sort of thing as Intel shifts this to their GPUs.

JimboPalmer

Re: AVX512 faster than the very best GPUs?

My concerns are as a programmer. Every version uses a slightly different implementation of AVX-512, so you cannot trust that your code will run without exhaustive checks. If AMD implements it at 5 nm because they have room to spare, I would hope THAT version becomes the new standard.

https://en.wikipedia.org/wiki/AVX-512#CPUs_with_AVX-512

AVX-512 is rare; having 9 flavors of rare is useless. If AMD puts just one version on every Zen 4 CPU, that solves my issues.
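For the "exhaustive checks" problem, here is a minimal Linux-only sketch that reads the `flags` line of `/proc/cpuinfo` and reports which AVX-512 subsets the CPU advertises. The five flag names used (`avx512f`, `avx512cd`, `avx512bw`, `avx512dq`, `avx512vl`) are the Linux kernel's names for the foundation and common subsets; the list is deliberately not exhaustive. Compiled code would more typically query CPUID directly, for example via GCC's `__builtin_cpu_supports("avx512f")`.

```python
# Illustrative subset of Linux cpuinfo flag names; not an exhaustive list.
AVX512_FLAGS = ("avx512f", "avx512cd", "avx512bw", "avx512dq", "avx512vl")


def avx512_support(flags_line):
    """Map each AVX-512 subset flag to whether it appears in a cpuinfo 'flags' line."""
    flags = set(flags_line.split(":", 1)[-1].split())
    return {name: name in flags for name in AVX512_FLAGS}


def read_cpuinfo_flags(path="/proc/cpuinfo"):
    """Return the first 'flags' line from /proc/cpuinfo, or '' if unavailable."""
    try:
        with open(path) as f:
            for line in f:
                if line.startswith("flags"):
                    return line
    except OSError:
        pass
    return ""


if __name__ == "__main__":
    for name, present in avx512_support(read_cpuinfo_flags()).items():
        print(f"{name:10s} {'yes' if present else 'no'}")
```

Matching whole tokens through a set, rather than searching for substrings, keeps a short flag name from matching inside a longer flag that happens to start with the same letters.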

Re: AVX512 faster than the very best GPUs?

JimboPalmer wrote:

My concerns are as a Programmer. Every version uses a slightly different implementation of AVX-512, so you cannot trust your code will run without exhaustive checks. IF AMD impements it at 5 nm because they have room to spare, I would hope THAT version become the new standard.

https://en.wikipedia.org/wiki/AVX-512#CPUs_with_AVX-512

AVX-512 is rare, having 9 flavors of rare is useless. If AMD puts just one version on every Zen 4 CPU, that solves my issues.

Based on the rumors I've heard, AMD is in no rush. AVX512 requires a lot of die area. Given the MCM approach they've taken, that die area is much better used for cache/interconnect, which hides the (comparatively) atrocious memory latency Zen 2/3 have compared to Intel. They may reevaluate when the MCM approach is more mature, but for now I think they want to focus on making the fact that there are multiple CCDs completely transparent to the dev. Unfortunately that's not the case at the moment, and devs writing for TR or the *900X or *950X have to be aware of it and 'group' threads together by L3 cache blocks.

https://en.wikichip.org/wiki/amd/microarchitectures/zen

Note how decreased latency is an explicit goal for Zen 4:

https://en.wikichip.org/wiki/amd/microa ... ures/zen_4

Technically, a similar issue exists in Intel's higher-core-count CPUs, depending on which interconnect bridge location a core is tied to (IIRC there are two on Skylake). The standard strategy there is to split the CPU into two effective NUMA 'nodes' because of the core-to-core cache latency.

https://en.wikichip.org/wiki/intel/micr ... ke_(server)
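On Linux, one way to find the "L3 cache blocks" to group threads by is the sysfs cache topology under `/sys/devices/system/cpu/cpuN/cache/indexM/`, where `level` gives the cache level and `shared_cpu_list` lists the logical CPUs sharing that cache. This is a minimal sketch assuming that standard layout is present; pinning a worker to one group is then a call to `os.sched_setaffinity`, which exists only on Linux.

```python
import glob
import os


def parse_cpu_list(text):
    """Expand a sysfs CPU list such as '0-3,8-11' into [0, 1, 2, 3, 8, 9, 10, 11]."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def l3_groups(sysfs="/sys/devices/system/cpu"):
    """Return the distinct groups of logical CPUs that share an L3 cache."""
    groups = set()
    pattern = os.path.join(sysfs, "cpu[0-9]*", "cache", "index*", "level")
    for level_path in glob.glob(pattern):
        with open(level_path) as f:
            if f.read().strip() != "3":
                continue  # not an L3 entry
        shared_path = os.path.join(os.path.dirname(level_path), "shared_cpu_list")
        with open(shared_path) as f:
            groups.add(tuple(parse_cpu_list(f.read())))
    return sorted(groups)


def pin_to_group(group):
    """Restrict the calling process to one L3 group (Linux-only)."""
    os.sched_setaffinity(0, set(group))


if __name__ == "__main__":
    for group in l3_groups():
        print("CPUs sharing an L3:", group)
```

Each worker process could then call `pin_to_group` with a different group so that threads exchanging data stay behind the same L3.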

It's also worth noting that die shrinks only do so much; in many cases CPUs are literally running up against the speed of light in terms of latency. You can reduce that with things like die stacking, but at some point the thermal dissipation limits of silicon become the limiter. So my guess, for what it's worth, is that we'll see a 'loose' (weakly ordered) execution mode introduced into x86, much like ARM has by default (and the M1 can turn off). This allows wider internal pipes in the cores without wasting die area on massive register files, because you can reorder instructions more aggressively, which can massively speed things up at lower clocks.

Re: AVX512 faster than the very best GPUs?

Yeah, AVX512 probably has tons of memory latency compared to modern GPUs.

They'll work fine as long as there's enough on-chip (L1/L2/L3) cache. But cache consumes a lot of power, so most CPUs have a very limited amount of it.

Plus, the X86 architecture is seriously memory bottlenecked, unlike ARM technology.

I can't wait until fully working GPU compute modules like these are coming out instead of regular GPUs:

Last edited by Joe_H on Sat Apr 24, 2021 5:13 pm, edited 1 time in total.

Reason: corrected image link - no .webp

Re: AVX512 faster than the very best GPUs?

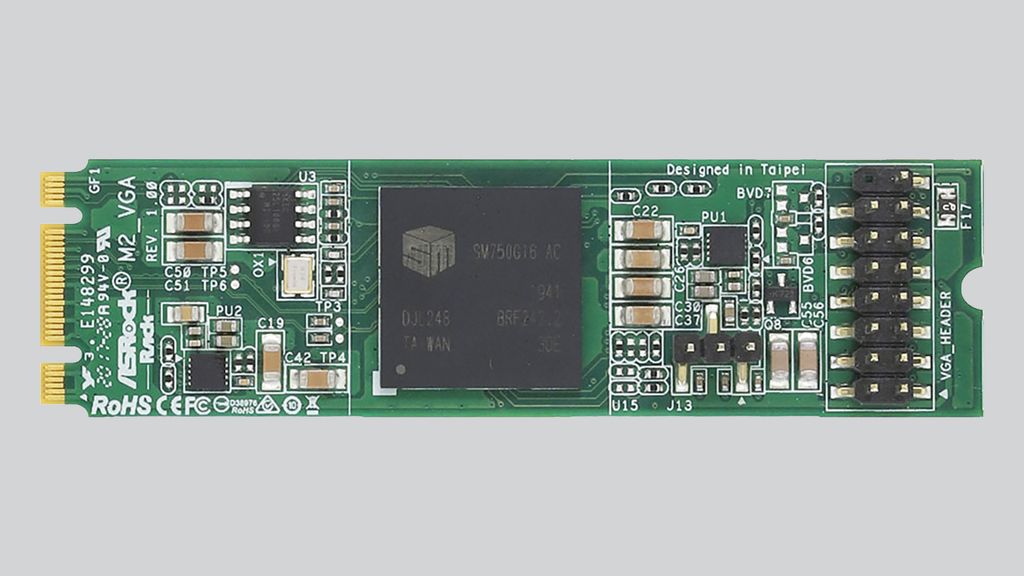

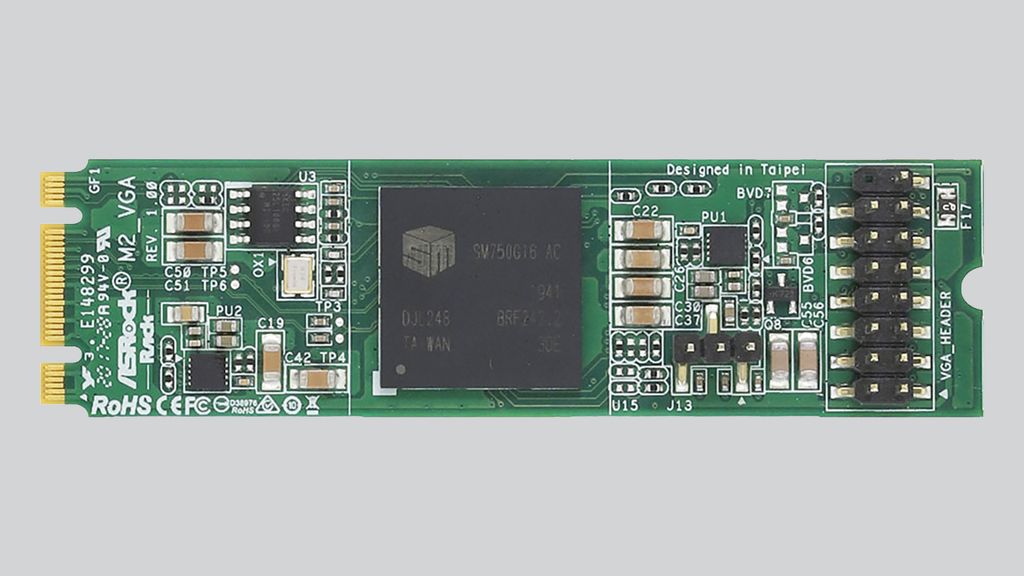

https://www.tomshardware.com/news/asroc ... phics-cardMeeLee wrote:I can't wait until fully working GPU compute modules like these are coming out instead of regular GPUs:

The ASRock M_2 VGA module uses Silicon Motion's SM750 display controller with 16MB of embedded memory and a PCIe x1 interface. The card has a 15-pin header to connect a D-Sub output that supports resolutions of up to 1920×1440.

The Silicon Motion SM750 is a rather simplistic display controller that only supports 2D graphics and a basic video engine. The chip features two display engines and has two 300 MHz RAMDACs, one TMDS transmitter, and one LVDS transmitter.

Given the measly specs of that M.2 graphics card, it could be a long while.

Re: AVX512 faster than the very best GPUs?

MeeLee wrote:

Yeah, AVX512 probably has tons of memory latency compared to modern GPUs.

They'll work fine as long as there's enough L Cache. But L-cache consumes a lot of power, so most CPUs have a very limited amount of that.

Plus, the X86 architecture is seriously memory bottlenecked, unlike ARM technology.

I want to correct this, because I see it a lot and people don't understand that ARM implementations don't inherently have better memory bandwidth. In fact, many have worse. What has happened is that several ARM64 implementations have chosen high-bandwidth memory designs that are closer to GDDR than to normal DDR. FWIW, DDR5 has amazing bandwidth, but the ability to size your RAM to your use case comes at the cost of a fixed pipe width to that RAM. So it's all about trade-offs. Moreover, GPU memory latency can actually be worse; again, higher bandwidth with higher latency is often the name of the game.
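The "fixed pipe width" trade-off shows up directly in the usual theoretical-peak formula: transfer rate × bus width in bytes × number of channels. Here is a minimal sketch with two illustrative configurations (dual-channel DDR4-3200 with 64-bit channels, and 14 Gbps GDDR6 on a 256-bit bus); these are example inputs, not figures for any system discussed in the thread.

```python
def peak_bandwidth_gbs(megatransfers_per_s, bus_width_bits, channels=1):
    """Theoretical peak in GB/s: transfers/s * bytes per transfer * channels."""
    bytes_per_transfer = bus_width_bits / 8
    return megatransfers_per_s * 1e6 * bytes_per_transfer * channels / 1e9


ddr4 = peak_bandwidth_gbs(3200, 64, channels=2)  # dual-channel DDR4-3200
gddr6 = peak_bandwidth_gbs(14000, 256)           # 14 Gbps GDDR6 on a 256-bit bus

print(f"DDR4-3200 x2:  {ddr4:.1f} GB/s")
print(f"GDDR6 256-bit: {gddr6:.1f} GB/s")
print(f"ratio: {gddr6 / ddr4:.2f}x")
```

These are theoretical peaks; sustained bandwidth is lower in practice, and the formula says nothing about latency.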